Imagine you’re going to visit the “big AI boss,” the one that controls all kinds of systems: the post office, utilities, government systems, business operations, etc. You walk into the command room and there it is, one big beautiful brain, as it were, pulsating away in vibrant color while a set of wires connects its body to the network.

Okay, I’ll pull back the curtain on this scintillating vision, because according to quite a few experts, this is not what you’re going to see with artificial general intelligence.

Instead, as we near the singularity, some of our best minds argue that you’re likely to see something that looks more like the Internet: something’s happening over here, something else is happening over there, and efficient conduits connect everything together in real time. It appears chaotic up close, yet the system functions with the elegance and precision of a Swiss watch: complex, dynamic, and remarkably coherent.

If you read this blog with any regularity, you’re probably tired of me referencing one of our great MIT people, Marvin Minsky, and his book The Society of Mind, in which he pioneered this very idea. After much contemplation and research, Minsky argued that the human brain, despite being a single biological organ, is not one computer but a collection of many different “machines” hooked up to one another.

You might argue that this is a semantic distinction, since we already know, for example, how the cortex works, how the two halves of the brain coordinate, and what roles sub-structures like the amygdala play. But that’s not all Minsky gave us in his treatise: he also introduced the idea of “K-lines,” or knowledge lines, the trajectories by which we remember things. Think of packets making next-hop journeys across the Internet; there are similarities there.
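In Minsky’s account, a K-line attaches to the mental “agents” that were active when you solved a problem or had an idea, so that activating it later puts you back into a similar state of mind. Here is a toy sketch of that idea, assuming agents can be modeled as plain named switches. The class name `KLineMemory` and the agent names are hypothetical, made up for illustration; this is not Minsky’s own formalism.

```python
from dataclasses import dataclass, field


@dataclass
class KLineMemory:
    """Toy model of Minsky-style K-lines (hypothetical illustration).

    A K-line is a named snapshot of the agents that were active during
    some experience; recalling it switches those agents back on.
    """
    klines: dict[str, frozenset[str]] = field(default_factory=dict)
    active: set[str] = field(default_factory=set)

    def activate(self, *agents: str) -> None:
        # Turn on agents, as if the current task engaged them.
        self.active.update(agents)

    def record(self, name: str) -> None:
        # Attach a K-line to every agent that is active right now.
        self.klines[name] = frozenset(self.active)

    def reset(self) -> None:
        # Clear the current mental state (all agents off).
        self.active.clear()

    def recall(self, name: str) -> set[str]:
        # Re-activate the remembered agents; unknown names activate nothing.
        self.active.update(self.klines.get(name, frozenset()))
        return set(self.active)


memory = KLineMemory()
memory.activate("see-red", "grasp", "sweet-taste")
memory.record("eating-an-apple")
memory.reset()
print(sorted(memory.recall("eating-an-apple")))
# ['grasp', 'see-red', 'sweet-taste']
```

The point of the sketch is that the memory stores *which parts to re-engage*, not the experience itself, which is close in spirit to the society-of-machines view above.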

Minsky also explored what some describe as the immanence of meaning: the notion that meaning isn’t embedded in data itself but emerges from the act of interpretation. This, to me, feels profoundly Zen. Look up “immanence” and you’ll find it’s often seen as the opposite of transcendence: not rising above, but diving deep within. Interestingly, there’s rigorous cognitive science that echoes this philosophy, especially in the popular learning theory known as Constructivism, which posits that we don’t passively absorb knowledge, but actively construct it through experience.

That makes a lot of sense, and I would argue it gives us another useful lens through which to look at AI. When people argue about whether AI is “real” or “sentient,” I would say that, in some ways, the ripples are more real than the rock that made them (to use a physical metaphor): the “reality” of AI lies in how we process what it produces.

To be fair, as agents evolve, they’re going to get pretty real in other ways, too. They’ll be doing things and manipulating systems 24/7, getting into whatever they can get their digital hands on.

That’s why I wanted to cover a presentation Abhishek Singh gave at IIA in April, in which he makes a compelling case about how we are likely to react to new digital “species” of intelligence.

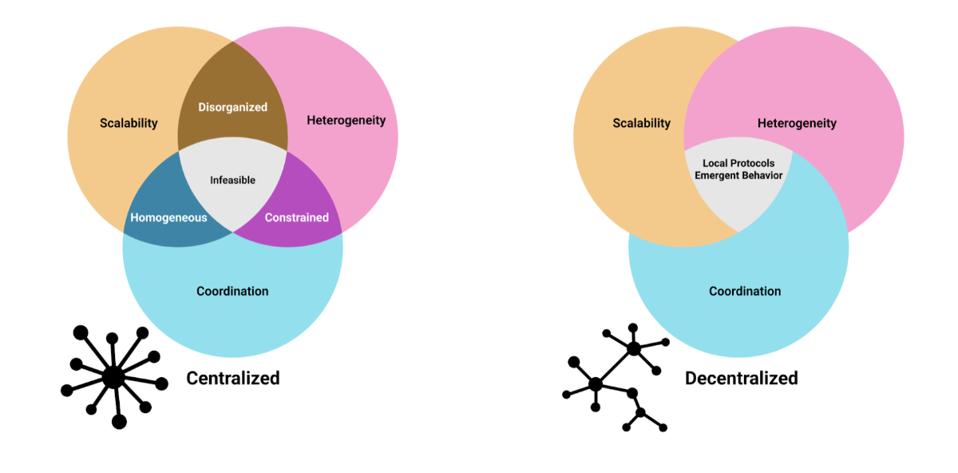

Singh describes what he calls an 'intelligence trilemma' - a fundamental tension between three desirable qualities that systems rarely achieve simultaneously. The first is scalability: can the system work effectively as it grows larger? The second is coordination: can different parts work together smoothly? The third is heterogeneity: can individuals or components take on specialized, different roles?

To illustrate this tension, Singh points to examples from nature. Consider how different species organize themselves. Ant colonies achieve remarkable scale - millions of individuals working together - with impressive coordination, but individual ants are largely interchangeable, limiting the complexity of tasks they can accomplish.

Wolf packs, by contrast, show high heterogeneity - pack members take on distinct roles as hunters, scouts, or caregivers - and coordinate effectively within their group. But wolf societies don't scale: packs rarely exceed a dozen members.
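One way to make the trilemma concrete is to encode the two examples above as simple profiles and ask which quality each one gives up. The booleans below are just the qualitative descriptions from the text, not measurements, and the names `Quality`, `PROFILES`, and `sacrificed` are hypothetical.

```python
from enum import Enum


class Quality(Enum):
    SCALABILITY = "scalability"
    COORDINATION = "coordination"
    HETEROGENEITY = "heterogeneity"


# Qualitative profiles taken from the examples in the text.
# True means "achieves this quality well"; these are illustrations, not data.
PROFILES: dict[str, dict[Quality, bool]] = {
    "ant colony": {
        Quality.SCALABILITY: True,
        Quality.COORDINATION: True,
        Quality.HETEROGENEITY: False,  # individual ants are largely interchangeable
    },
    "wolf pack": {
        Quality.SCALABILITY: False,  # packs rarely exceed a dozen members
        Quality.COORDINATION: True,
        Quality.HETEROGENEITY: True,
    },
}


def sacrificed(profile: dict[Quality, bool]) -> list[str]:
    """Return the qualities a system gives up (empty if it achieves all three)."""
    return [quality.value for quality, achieved in profile.items() if not achieved]


for name, profile in PROFILES.items():
    print(f"{name}: sacrifices {sacrificed(profile)}")
# ant colony: sacrifices ['heterogeneity']
# wolf pack: sacrifices ['scalability']
```

In this framing, escaping the trilemma would mean a profile for which `sacrificed` returns an empty list, which is what Singh suggests human societies are still striving toward.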

'What makes human societies unique,' Singh explains, 'is our ongoing attempt to balance all three qualities simultaneously, though we still face fundamental trade-offs.'

This mirrors a principle from distributed systems theory (think databases) called the CAP theorem, which states that a distributed data store cannot simultaneously guarantee consistency, availability, and partition tolerance; it can fully provide at most two of the three. Singh suggests a similar dynamic governs how intelligent systems, whether animal societies, human organizations, or artificial intelligence networks, organize themselves.
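To see the CAP trade-off in miniature, consider two replicas of one value when the network between them is cut. A “CP” store refuses writes it cannot replicate, so it stays consistent but becomes unavailable. An “AP” store accepts the write locally, so it stays available but the replicas diverge. This is a deliberately simplified sketch; the class names and the `mode` switch are hypothetical, and real databases use quorums, versioning, and conflict resolution rather than anything this crude.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    value: str = "v0"


class TinyStore:
    """Two replicas of one value, used only to illustrate the CAP trade-off.

    mode="CP": during a partition, reject writes (consistent, not available).
    mode="AP": during a partition, accept writes locally (available, may diverge).
    """

    def __init__(self, mode: str) -> None:
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.a, self.b = Replica("a"), Replica("b")
        self.partitioned = False

    def write(self, replica: Replica, value: str) -> bool:
        if not self.partitioned:
            # Healthy network: replicate the write to both copies.
            self.a.value = self.b.value = value
            return True
        if self.mode == "CP":
            # The other replica is unreachable, so refuse rather than diverge.
            return False
        # AP: accept the write on the local replica only.
        replica.value = value
        return True

    def consistent(self) -> bool:
        return self.a.value == self.b.value


for mode in ("CP", "AP"):
    store = TinyStore(mode)
    store.partitioned = True
    accepted = store.write(store.a, "v1")
    print(mode, "accepted:", accepted, "consistent:", store.consistent())
# CP accepted: False consistent: True
# AP accepted: True consistent: False
```

Once a partition happens, the store has to pick which guarantee to drop, which is the kind of forced choice Singh is pointing to with his trilemma.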

Singh then introduces what he calls 'CHAOS theory 2.0.' Despite the name, it is not the traditional chaos theory of dynamical systems, but a framework for understanding how Coordination, Heterogeneity, and Scalability interact in AI systems.

'In centralized systems,' Singh explains, 'when you optimize for two of these qualities, you inevitably sacrifice the third. But operating in a decentralized manner can help bridge these boundaries.'

However, Singh emphasizes that decentralization alone isn't a magic solution. 'You need specific algorithms and protocols that actually enable you to achieve these three goals in a decentralized fashion. We approach this through two key concepts: local protocols and emergent behavior.'
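A classic example of a local protocol producing a global result is distributed averaging (often called gossip averaging). Each node only talks to its immediate neighbors, yet every node ends up holding the network-wide average, with no central coordinator. This is a standard textbook technique chosen for illustration; it is not the specific protocol Singh describes, and the function name `gossip_average` is hypothetical.

```python
def gossip_average(values: list[float], rounds: int) -> list[float]:
    """Ring-based distributed averaging.

    In every round, each node replaces its value with the mean of itself
    and its two ring neighbours. Each value is split equally among three
    nodes, so the total (and therefore the average) is preserved, and all
    nodes converge toward the global mean using only local information.
    """
    n = len(values)
    current = list(values)
    for _ in range(rounds):
        current = [
            (current[(i - 1) % n] + current[i] + current[(i + 1) % n]) / 3
            for i in range(n)
        ]
    return current


# One node starts with all the "information"; the global average is 12 / 6 = 2.
result = gossip_average([12.0, 0.0, 0.0, 0.0, 0.0, 0.0], rounds=100)
print([round(v, 6) for v in result])
# [2.0, 2.0, 2.0, 2.0, 2.0, 2.0]
```

No node ever computes "the average" directly; it emerges from many small neighbor-to-neighbor exchanges, which is the flavor of emergent behavior the quote above describes.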

To illustrate, Singh contrasts two approaches to artificial intelligence. The current paradigm resembles 'one big brain sitting at a large tech company, capable of handling all tasks simultaneously.' The alternative is 'many small brains interacting with each other. No single small brain is powerful enough alone, but together, using coordination protocols, they create emergent intelligence that exceeds the sum of their parts.'
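A simple, well-known way to see “many small brains” outperforming any one of them is majority voting, the idea behind the Condorcet jury theorem. If each voter is independently right with probability slightly better than chance, a majority of many voters is right far more often. The function below computes that probability exactly from the binomial distribution. It assumes the voters are independent, which real AI agents trained on similar data usually are not, so treat it as an upper-bound intuition rather than a model of Singh’s system.

```python
from math import comb


def majority_accuracy(n: int, p: float) -> float:
    """Probability that a strict majority of n independent voters,
    each correct with probability p, reaches the right answer."""
    if n % 2 == 0:
        raise ValueError("use an odd number of voters to avoid ties")
    needed = n // 2 + 1
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(needed, n + 1)
    )


for n in (1, 11, 101):
    print(n, round(majority_accuracy(n, 0.6), 3))
```

With `p = 0.6`, a single voter is right 60% of the time, and the group’s accuracy rises as more independent voters are added. If `p` drops to 0.5, the crowd is no better than a coin flip, so the coordination only pays off when each small brain contributes some real signal.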

Interestingly, Singh notes that even the 'big brain' approach faces the same trilemma, just at a different scale. “This one big brain approach also has this notion of the trilemma, but in a fractal way,” he says, “where inside that one large neural network, you have lots of parameters - they're coordinating with each other, and they're solving different sub tasks, and that's why you have heterogeneity.” In other words, the trilemma reappears at multiple levels of the system.

This touches on the idea I mentioned above: heterogeneity may be partly a matter of semantics, because even within one big brain, lots of different things are happening side by side. In a biological brain, likewise, anatomy means that brain cells are not entirely fungible.

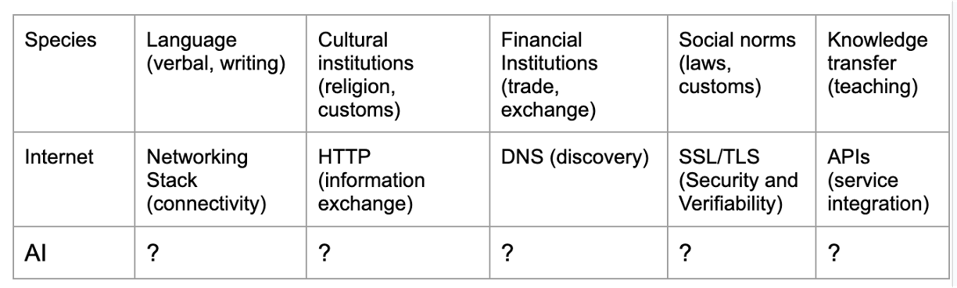

Fig: What does it mean to create protocols for coordination among AI agents? Watch Singh’s talk for the answer.

Singh draws inspiration for CHAOS from how human societies have solved coordination challenges throughout history. "At the species level," he explains, "we have language, cultural institutions, and financial institutions - and prices don't just let you buy things, they convey information about the utility of goods." He points to social norms that allow people to operate within boundaries, and crucially, knowledge transfer systems - "all the institutions we've built to educate people and transfer knowledge across generations." These biological and social coordination mechanisms, Singh argues, offer blueprints for how AI agents might organize themselves.
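Singh’s point that prices “convey information” can be illustrated with a textbook price-adjustment loop (Walrasian tâtonnement). Buyers and sellers only ever react to a single number, the price, and the price moves up when demand exceeds supply and down when supply exceeds demand, so it ends up encoding scarcity. The linear demand and supply curves and the step size below are hypothetical, and the mechanism is a standard economics illustration rather than anything taken from Singh’s talk.

```python
from collections.abc import Callable


def clearing_price(
    demand: Callable[[float], float],
    supply: Callable[[float], float],
    price: float = 1.0,
    step: float = 0.05,
    tol: float = 1e-9,
    max_iter: int = 10_000,
) -> float:
    """Nudge the price until quantity demanded matches quantity supplied."""
    for _ in range(max_iter):
        excess = demand(price) - supply(price)
        if abs(excess) < tol:
            break
        # Excess demand raises the price; excess supply lowers it.
        price += step * excess
    return price


def demand(p: float) -> float:
    # Hypothetical: buyers want less as the price rises.
    return 100 - 2 * p


def supply(p: float) -> float:
    # Hypothetical: sellers offer more as the price rises.
    return 10 + 4 * p


print(round(clearing_price(demand, supply), 4))  # prints 15.0 (analytically 90 / 6)
```

The step size matters: with these curves, each update shrinks the gap to equilibrium by a factor of `1 - step * 6`, so a step that is too large would overshoot and oscillate instead of settling.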

Watch the part of the video where Singh covers financial markets, social mores, and knowledge transfer, and you’ll see more practical applications of these ideas to real life. He also draws parallels between agent systems and the early Internet, when engineers had to work out networking and connectivity through protocols like HTTP and SSL. Singh mentions the Model Context Protocol (MCP) and, sure enough, drops the acronym NANDA, MIT’s ambitious project to create the internet of AI agents.
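Part of what made the early Internet work was discovery: a way to find who offers what, and where to reach them. The sketch below shows that idea for agents as a tiny in-memory directory where agents register capabilities and others look them up. Everything here is hypothetical, including `AgentRecord`, `AgentRegistry`, and the `.example` endpoints; it is not the NANDA or MCP interface, just an illustration of the kind of coordination layer those projects address.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentRecord:
    agent_id: str
    endpoint: str                 # hypothetical address where the agent listens
    capabilities: frozenset[str]  # what the agent says it can do


@dataclass
class AgentRegistry:
    """Hypothetical in-memory agent directory, loosely analogous to DNS
    for websites. Not the NANDA or MCP API."""
    _records: dict[str, AgentRecord] = field(default_factory=dict)

    def register(self, record: AgentRecord) -> None:
        # Re-registering the same agent_id replaces the old record.
        self._records[record.agent_id] = record

    def find(self, capability: str) -> list[AgentRecord]:
        # Return every agent advertising the capability, in a stable order.
        return sorted(
            (r for r in self._records.values() if capability in r.capabilities),
            key=lambda r: r.agent_id,
        )


registry = AgentRegistry()
registry.register(AgentRecord("translator-1", "https://translator.agents.example",
                              frozenset({"translate", "summarize"})))
registry.register(AgentRecord("scheduler-1", "https://scheduler.agents.example",
                              frozenset({"schedule"})))
print([r.agent_id for r in registry.find("translate")])
# ['translator-1']
```

A real system would also need authentication, trust, and liveness checks, which is exactly where standardized protocols come in.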

Do we need more CHAOS in AI? Watch the video, and let me know.
